Create container images in a workspace

Prior reading: Using the Workbench CLI from a container

Purpose: This document explains how to build Docker container images and push them to Artifact Registry to use in your Verily Workbench workspace.

Introduction

This article describes how you can build and use Docker container images from within a Verily Workbench workspace.

This capability can be useful in many scenarios. For example, you may want to define a workflow (e.g., using a framework like Nextflow or dsub) that includes step(s) which require you to create custom containers. As another example, you may want to create a notebook instance that is based on your own custom container.

This article walks through how you can build container images from your workspace using either Google Cloud Build or the Docker command-line tool, then push the container image to the Google Artifact Registry so that you can use it in your workspace.

Custom containers for Vertex AI Workbench notebook environments

You can create Workbench notebook environments by choosing from a set of pre-built Deep Learning VMs. If these don’t meet your needs, you can create a custom container image to use when creating a notebook environment, as described below.

Tip

A notebook custom container should use one of these Deep Learning container images as its base image. See this page and this for more information.

Create an Artifact Registry repository

The Google Artifact Registry lets you store, manage, and secure your build artifacts. To get started, you’ll create an Artifact Registry repository in the Google project tied to your workspace.

- First, create or set the workspace that you want to use. You can do this from the Workbench UI, or from the command line as follows. (On your local machine you will first need to install the Workbench CLI.)

  wb status
  wb auth login  # if need be
  wb workspace list

  To create a new workspace (replace <workspace-name> with your workspace name):

  wb workspace create --name=<workspace-name>

  To set the Workbench CLI to use an existing workspace (replace <workspace-id> with your workspace ID):

  wb workspace set --id=<workspace-id>

- View any existing Artifact Registry repositories for your workspace via:

  wb gcloud artifacts repositories list

  You can run this command either from a local machine where you’ve installed the Workbench CLI, or from a notebook environment in your workspace.

  If you want to use an external repository, see Granting access to a private Artifact Registry repo in a separate Google project below.

- Create a new Artifact Registry repository from the command line like this, first replacing <your-repo-name> with the name of your new repo:

  wb gcloud artifacts repositories create <your-repo-name> --repository-format=docker \
    --location=us-central1

  As above, you can run this command either from a local machine where you’ve installed the Workbench CLI, or from a notebook environment in your workspace.

Create a Dockerfile

Next, you’ll define (or obtain) a Dockerfile from which to build your container image.

Create a directory for your Dockerfile and any other build artifacts, then create the Dockerfile. As a simple example for testing, you can create a Dockerfile that includes only the following line:

FROM gcr.io/deeplearning-platform-release/tf2-cpu.2-12.py310

This example uses a TensorFlow Deep Learning container as its base, and doesn’t make any other modifications.
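The directory and Dockerfile described above can be created from a terminal in one go. This is a minimal sketch: the directory name my-image is a hypothetical placeholder, and the Dockerfile contains only the single FROM line from the example.

```shell
# Create a build directory (the name "my-image" is a hypothetical placeholder).
mkdir -p my-image

# Write a one-line Dockerfile that uses the Deep Learning container as its base.
cat > my-image/Dockerfile <<'EOF'
FROM gcr.io/deeplearning-platform-release/tf2-cpu.2-12.py310
EOF

# Show the result so you can confirm its contents before building.
cat my-image/Dockerfile
```

Run the build commands in the following sections from inside this directory.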

After you’ve defined your Dockerfile (and any other build artifacts), you can build and push your container image via either Cloud Build or by running docker directly. Both are described below.

Use Cloud Build to build and push a Docker image to the Artifact Registry

Cloud Build can import source code from a variety of repositories or cloud storage spaces, execute a build to your specifications, and produce artifacts such as containers or code archives.

You can use Cloud Build to build a Docker image and push it to the workspace project’s Artifact Registry repository in one step, from the command line or in a notebook environment. With Cloud Build, the Docker build runs in the cloud, which is particularly helpful when you want to launch a compute-intensive build from, say, an underpowered cloud environment.

- Ensure that you have created a Workbench Cloud Storage bucket resource to use. If you haven’t done so yet, you can do so via the Workbench UI or from the command line like this:

  wb resource create gcs-bucket --id=ws_files \
    --description="Bucket for reports and provenance records."

  This command creates a bucket with the resource name ws_files. The following example assumes a bucket with this resource name. If you want to use a different bucket resource, you can see your existing bucket resource names via wb resource list.

- Then, in the same directory as your Dockerfile, run Cloud Build to build the container and push it to the Artifact Registry, first replacing <your-repo-name> in the following command:

  wb gcloud builds submit \
    --timeout 2h --region=us-central1 \
    --gcs-source-staging-dir \${WORKBENCH_ws_files}/cloudbuild_source \
    --gcs-log-dir \${WORKBENCH_ws_files}/cloudbuild_logs \
    --tag us-central1-docker.pkg.dev/\$GOOGLE_CLOUD_PROJECT/<your-repo-name>/test1:`date +'%Y%m%d'`

  Note that we’re running the command as wb gcloud .... This “pass-through” allows us to use environment variables that will be set by wb. The backslash before each $ keeps your local shell from expanding the variable before wb sets it. The WORKBENCH_ws_files environment variable tells Workbench to use the Cloud Storage bucket with resource name ws_files. If you are using a bucket with a different resource name or a different region, change that info as well. $GOOGLE_CLOUD_PROJECT will be set to the name of the project underlying your current Workbench workspace.

(This example command sets the container image name to test1 and uses a date-based tag; you can edit those as well.)
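To see how the value passed to --tag is put together, the following sketch assembles the same kind of image path from its parts and prints it. The project ID and repository name here are hypothetical placeholders; in the command above, the project comes from GOOGLE_CLOUD_PROJECT instead.

```shell
# Hypothetical placeholder values; substitute your own.
REGION="us-central1"
PROJECT_ID="my-project-id"
REPO_NAME="my-repo"
IMAGE_NAME="test1"

# Date-based tag in YYYYMMDD form, as in the Cloud Build example.
TAG="$(date +'%Y%m%d')"

# Artifact Registry image paths have the form
# <region>-docker.pkg.dev/<project-id>/<repo-name>/<image-name>:<tag>
IMAGE_URI="${REGION}-docker.pkg.dev/${PROJECT_ID}/${REPO_NAME}/${IMAGE_NAME}:${TAG}"
echo "${IMAGE_URI}"
```

Changing IMAGE_NAME or TAG here corresponds to editing the image name and tag in the --tag argument.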

Once your container image has been built and pushed, you can see it listed in the Google Cloud Artifact Registry here: https://console.cloud.google.com/artifacts. Click into the repo and then your image name to see its details.

Use the docker tool to build and push an image to the Artifact Registry

As an alternative to using Cloud Build, you can run docker directly. You can use Docker from your local machine if it’s installed, or from a Workbench notebook environment (e.g., in a terminal window).

For example, to build a container image, run the following command in the directory where your Dockerfile is, replacing <your-project-id>, <your-repo-name>, and <container-name>:<tag> with your details. You can find your workspace’s project ID from the overview page in the UI or via wb workspace describe, or from the GOOGLE_CLOUD_PROJECT environment variable in a workspace notebook environment.

docker build -t us-central1-docker.pkg.dev/<your-project-id>/<your-repo-name>/<container-name>:<tag> .

To push your image to your Artifact Registry repository, you may need to first run the following command (you only need to do this once for each Registry region, in this case us-central1):

gcloud auth configure-docker us-central1-docker.pkg.dev

Then, push your container image to the registry (again, replacing <your-project-id>, <your-repo-name>, and <container-name>:<tag> with your details):

docker push us-central1-docker.pkg.dev/<your-project-id>/<your-repo-name>/<container-name>:<tag>
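The build, authentication, and push steps above can be combined into one small script. This is a sketch under stated assumptions: all variable values are hypothetical placeholders, and the run helper with its DRY_RUN switch is an illustrative convenience rather than part of Workbench or Docker. By default it only prints the commands so that you can check them first.

```shell
set -e

# Hypothetical placeholder values; substitute your own.
REGION="us-central1"
PROJECT_ID="my-project-id"
REPO_NAME="my-repo"
IMAGE_NAME="my-image"
TAG="v1"
IMAGE_URI="${REGION}-docker.pkg.dev/${PROJECT_ID}/${REPO_NAME}/${IMAGE_NAME}:${TAG}"

# Illustrative helper: print each command unless DRY_RUN=0 is set,
# in which case the command is actually executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Build from the Dockerfile in the current directory.
run docker build -t "${IMAGE_URI}" .
# Configure Docker credentials for this registry region (needed once per region).
run gcloud auth configure-docker "${REGION}-docker.pkg.dev"
# Push the image to the Artifact Registry repository.
run docker push "${IMAGE_URI}"
```

Once the printed commands look right, rerun the script with DRY_RUN=0 in the environment to execute them.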

Create a container-based cloud environment with your new container image

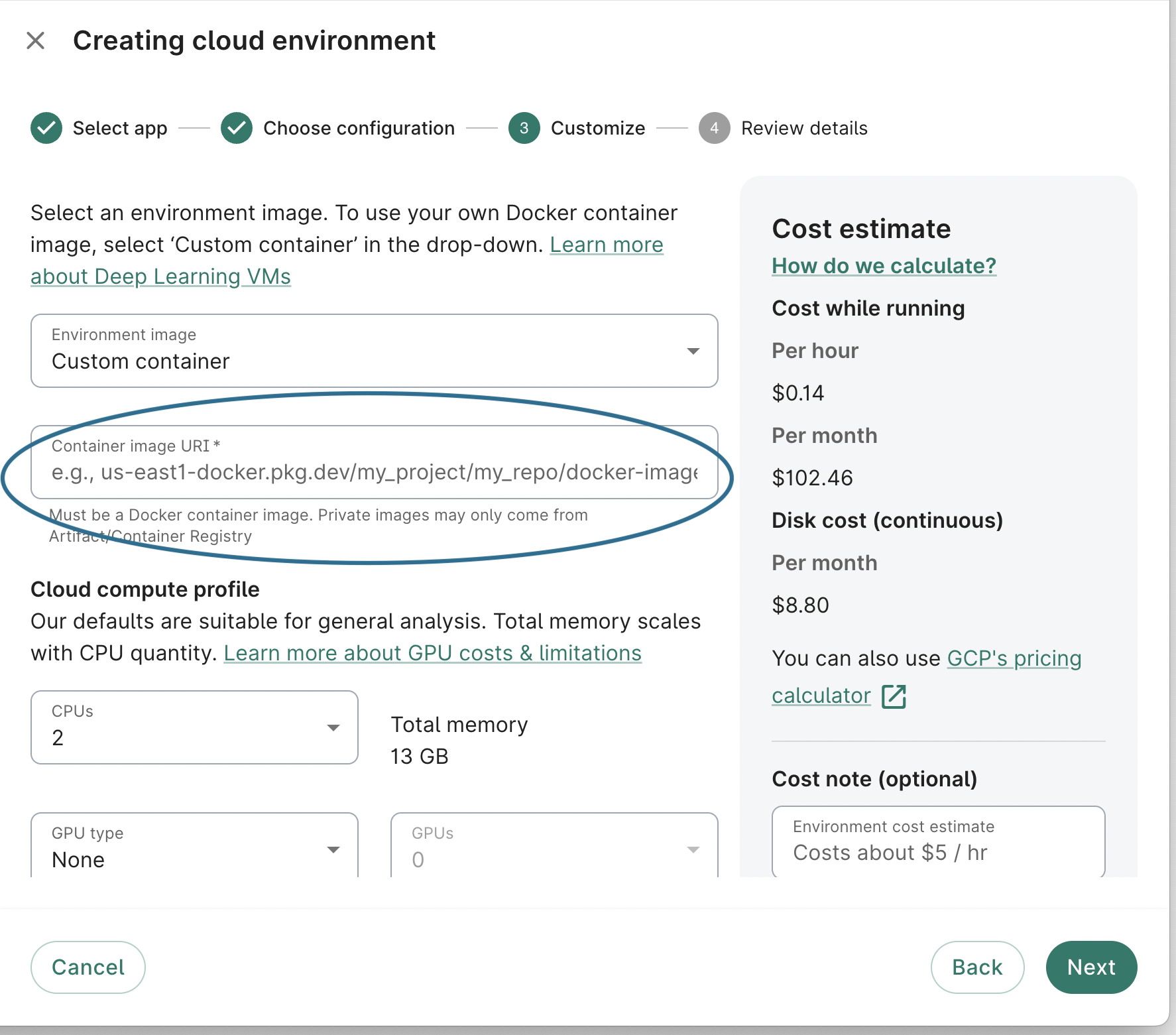

If your image is based on one of the Deep Learning container images (as in the example Dockerfile above), you can now create a Workbench cloud environment using that container image. You can do so via the Workbench UI:

Alternatively, you can create a custom container-based environment via the Workbench CLI like this (again, replacing <your-repo-name> and <container-name>:<tag> in the following command):

wb resource create gcp-notebook --id ctest --instance-id my-container-nb --machine-type=n1-standard-4 \
  --location=us-central1-c \
  --container-repository=us-central1-docker.pkg.dev/\$GOOGLE_CLOUD_PROJECT/<your-repo-name>/<container-name>:<tag>

Once the cloud environment comes up, you should be able to see it in the Workbench UI under the workspace Environments tab and list it from the command line via wb resource list.

You can connect to the cloud environment via the link in the UI, or by running the following (replace <notebook_name> with your notebook’s name):

wb resource describe --id <notebook_name>

and then visiting the listed proxy-url.

Granting access to a private Artifact Registry repo in a separate Google project

You may at times want to read from or push to an Artifact Registry in a Google project not associated with your workspaces.

To use a private Artifact Registry repo from a separate Google project in your Workbench workspaces, you need to grant read access to the proxy group. The proxy group contains the service account emails for all of your Workbench workspaces.

- Get the proxy group email for your workspace by running:

  wb auth status

- Go to the GCP project with the Artifact Registry. Replace <PROJECT_ID> with your project ID; <my-repo> with your Artifact Registry repository name; <us-central1> with the location of the repo; and <proxy-group-email> with the email from the first step:

  gcloud config set project <PROJECT_ID>
  gcloud artifacts repositories add-iam-policy-binding <my-repo> \
    --location=<us-central1> --member=group:<proxy-group-email>@verily-bvdp.com --role=roles/artifactregistry.reader

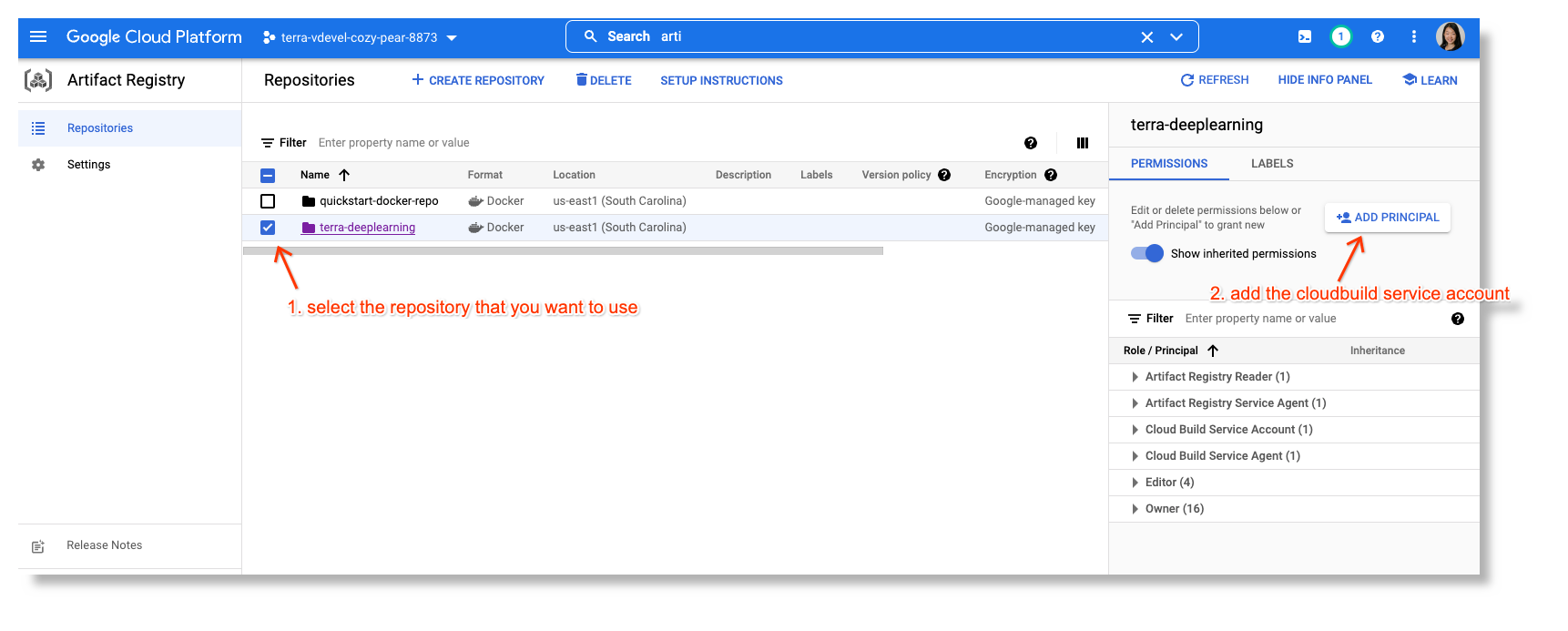

Grant the artifactregistry.writer role to the Cloud Build service account

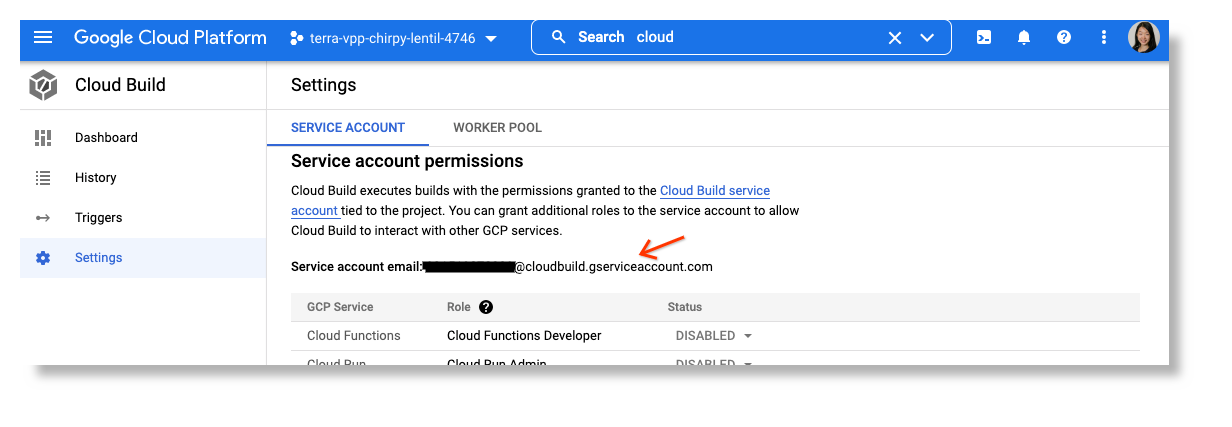

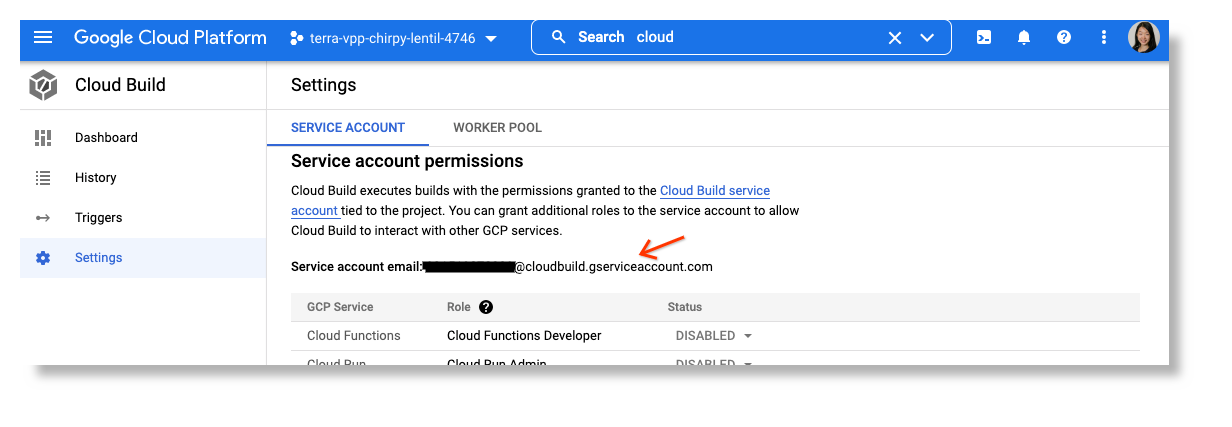

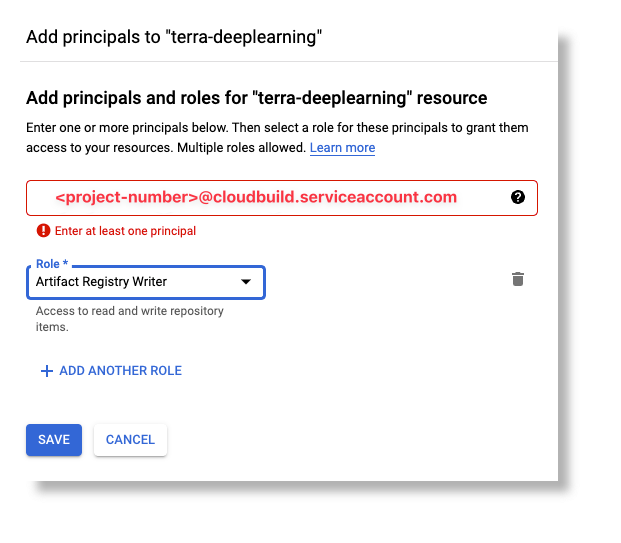

If you want to upload your images to an Artifact Registry outside of your workspace’s Google project, you need to grant the artifactregistry.writer role to the Cloud Build service account of the workspace. It’s in the format of <project-number>@cloudbuild.gserviceaccount.com and can be found in the GCP console > Cloud Build > Settings.

-

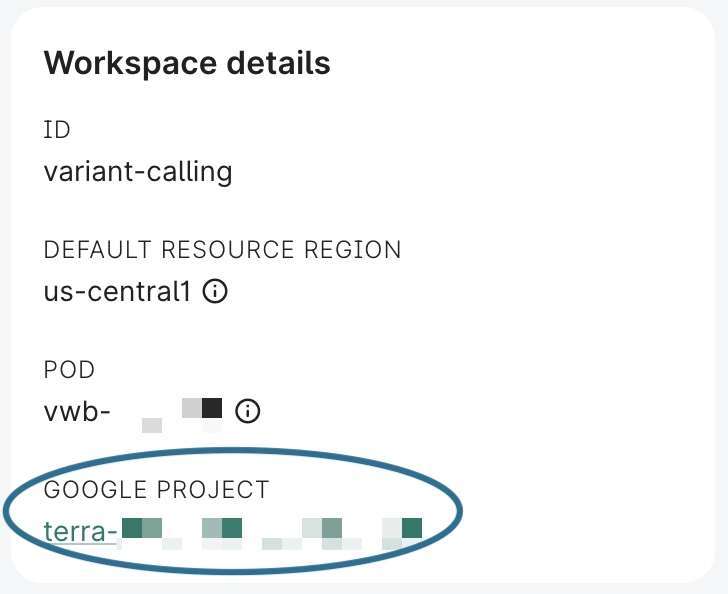

Go to the GCP console of the current workspace. Using the Workbench CLI, you can get the link to your project in the Cloud console by running:

wb statusFrom the UI, look for the Google project link under Workspace details:

-

Click on that link to visit the Google Cloud console for that project. In the Cloud console, go to the Cloud Build settings.

-

Get the Cloud Build service account, which should be in the form

<project-number>@cloudbuild.gserviceaccount.com:

-

Go to the Cloud console for the project containing the Artifact Registry that you want to use, and navigate to the Artifact Registry panel.

-

Select your Artifact Registry and click ADD PRINCIPAL:

-

Add the Cloud Build service account from your Workbench workspace with the

Artifact Registry Writerrole:
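If you prefer the command line to the console steps above, the same grant can be sketched with gcloud. This is an illustration, not a Workbench-documented procedure: the function names and all argument values are hypothetical, and it assumes your gcloud account has permission to read the workspace project and to change IAM policy on the external repository.

```shell
# Build the Cloud Build service account email from a project number.
cloud_build_sa() {
  printf '%s@cloudbuild.gserviceaccount.com\n' "$1"
}

# Grant the Artifact Registry Writer role on a repo in another project.
# Arguments (all hypothetical placeholders):
#   $1 workspace project ID, $2 registry project ID, $3 repo name, $4 repo location
grant_writer() {
  # Look up the numeric project number of the workspace project.
  project_number="$(gcloud projects describe "$1" --format='value(projectNumber)')"
  gcloud artifacts repositories add-iam-policy-binding "$3" \
    --project="$2" --location="$4" \
    --member="serviceAccount:$(cloud_build_sa "${project_number}")" \
    --role=roles/artifactregistry.writer
}

# Example call (not run here):
# grant_writer my-workspace-project my-registry-project my-repo us-central1
```

The helper functions only define the steps; nothing is changed until you call grant_writer with your own values.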

Additional Resources

GCP Cloud Build documentation: Quickstarts | Cloud Build Documentation

Last Modified: 4 October 2024